The global artificial intelligence (AI) boom is in full swing. Its core driving force is not only breakthroughs in computing-chip performance: innovation in memory technology is increasingly becoming a key battleground for the spread of AI, for efficiency gains and for industry development. From personal computers to data centers, and from cost challenges to national strategies, the memory industry is undergoing unprecedented change.

The memory challenge of AI adoption: high costs and the HBM bottleneck

AI is developing rapidly and holds enormous potential for every industry, but it also brings significant challenges. Pan Jiancheng, CEO of Phison Electronics, recently pointed out that many companies misunderstand AI, especially its cost. AI is indeed expensive, but the main reason is insufficient memory rather than computing power alone. Running large language models is often limited by GPU memory and DRAM capacity, which drives up the overall investment. Especially when memory supply is tight, lowering this barrier to entry is decisive for the spread of AI.
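To see why capacity, rather than compute, can set the cost of running a model, here is a back-of-the-envelope estimate of the memory needed just to hold an LLM's weights. The model size (70 billion parameters), the 2-byte FP16 format and the 80 GB per-GPU capacity are illustrative assumptions, not figures cited in this article, and the estimate ignores the KV cache and activations, which add further memory on top.

```python
import math

def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed only to hold the model weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9

def devices_needed(required_gb: float, capacity_gb: float) -> int:
    """Minimum number of devices whose combined memory covers required_gb."""
    return math.ceil(required_gb / capacity_gb)

# Illustrative assumptions: a 70-billion-parameter model stored in FP16
# (2 bytes per parameter) and a GPU with 80 GB of on-board memory.
weights = weight_memory_gb(70e9, 2)
print(f"weights: {weights:.0f} GB")                   # weights: 140 GB
print(f"GPUs needed: {devices_needed(weights, 80)}")  # GPUs needed: 2
```

Even before any computation starts, the weights alone would span two such GPUs, which is why cheaper ways to extend memory capacity matter for cost.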

Pan Jiancheng recalled that the industry had high hopes for AI PCs two years ago, but explosive growth has yet to materialize, mainly because of high hardware costs and a lack of compelling applications. AI PCs require additional GPUs, NPUs and larger memory, which makes it hard to bring prices down and in turn hinders both market expansion and the formation of an application ecosystem. The key to popularizing AI PCs is making the hardware platform affordable.

High-performance AI computing also faces supply challenges for high-bandwidth memory (HBM). China is investing heavily in AI memory R&D to address the HBM bottleneck facing its AI hardware development, to counter Western technology restrictions and to reduce its dependence on HBM. Geopolitics has severely constrained HBM supply, highlighting the vulnerability of today's memory supply chain and the urgency of finding alternatives.

Memory innovation: AI acceleration strategies centered on SSDs

Faced with memory costs, HBM bottlenecks, and the expected spread and performance gains of edge AI, global technology giants are turning their attention to innovative uses of flash memory and solid-state drives (SSDs), which they hope will play a more critical role in AI computing.

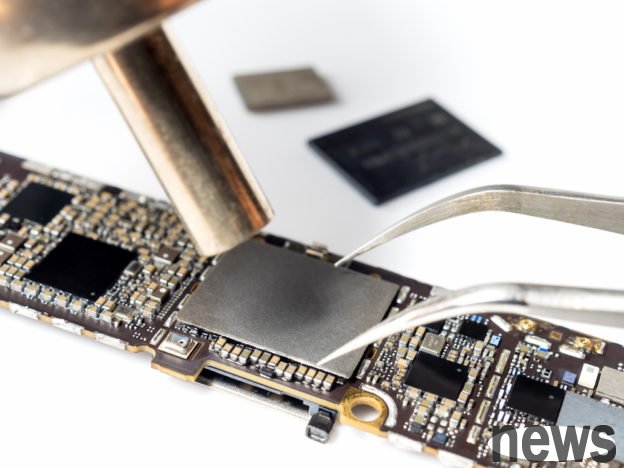

Among these, Phison has proposed a Flash Management solution to tackle high costs. Through a specially designed SSD, this technology turns part of the storage space into the cache memory needed for AI computing. Its biggest advantage is that it requires no major changes to the existing hardware architecture: only the storage device is replaced, which can improve AI inference performance while significantly reducing dependence on expensive DRAM.

Pan Jiancheng expects that as this solution spreads, the more than 300 million PCs shipped worldwide each year could be turned directly into AI PCs, recreating the thriving ecosystem of the smartphone era. This would not only spur new applications but also drive growth for Taiwan's ODMs, OEMs and component suppliers. The aim is to bring AI technology into everyday life and to secure Taiwan's position in the new wave of industrial supply chains.

China's AI memory strategy: breaking its reliance on HBM

Besides Phison, Chinese technology company Huawei is also showing its ambitions in AI memory. Foreign media report that Huawei is developing a new type of AI memory to replace the HBM currently used in China's AI hardware. This AI memory may take the form of a solid-state drive (SSD) deeply optimized for data center environments.

Huawei's AI SSD is designed to overcome the capacity limits of existing memory and to provide high performance with scalable capacity. According to the report, it is expected to match HBM in performance and efficiency, making it a viable alternative. This work would give China's AI industry a stable, independent memory supply chain, reducing its dependence on international supply chains and supporting China's national strategic goal of 100% self-sufficiency in AI chips by 2027.

Huawei has also launched the Unified Cache Manager (UCM) software suite, which accelerates large language model (LLM) inference across multiple memory tiers, including HBM, standard DRAM and SSDs, effectively extending the memory available to AI workloads. This offers a potential way around HBM hardware limits without adding new hardware.
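The idea of caching across HBM, DRAM and SSD tiers can be illustrated with a toy model. The sketch below is not Huawei's UCM and does not use its API; the class name `TieredCache`, the tier names and the entry-count capacities are hypothetical. It keeps recently used entries in the fastest tier, demotes the least recently used ones to slower, larger tiers, and promotes an entry back to the top tier when it is accessed again.

```python
from collections import OrderedDict
from typing import Hashable, Optional

class TieredCache:
    """Toy multi-tier LRU cache (hypothetical, for illustration only).
    Tiers are ordered fastest to slowest; capacity None means unbounded."""

    def __init__(self, tiers: list[tuple[str, Optional[int]]]):
        self.names = [name for name, _ in tiers]
        self.capacities = [cap for _, cap in tiers]
        self.stores = [OrderedDict() for _ in tiers]

    def put(self, key: Hashable, value: object) -> None:
        # Drop any stale copy, then place the entry in the fastest tier.
        for store in self.stores:
            store.pop(key, None)
        self._insert(0, key, value)

    def get(self, key: Hashable) -> Optional[object]:
        for store in self.stores:
            if key in store:
                value = store.pop(key)
                # Promote the accessed entry back into the fastest tier.
                self._insert(0, key, value)
                return value
        return None

    def tier_of(self, key: Hashable) -> Optional[str]:
        for name, store in zip(self.names, self.stores):
            if key in store:
                return name
        return None

    def _insert(self, level: int, key: Hashable, value: object) -> None:
        store = self.stores[level]
        store[key] = value  # most recently used entries sit at the end
        cap = self.capacities[level]
        if cap is not None and len(store) > cap:
            # Demote the least recently used entry one tier down; if this is
            # the last tier and it is bounded, the entry is evicted entirely.
            old_key, old_value = store.popitem(last=False)
            if level + 1 < len(self.stores):
                self._insert(level + 1, old_key, old_value)

cache = TieredCache([("HBM", 2), ("DRAM", 4), ("SSD", None)])
for i in range(8):
    cache.put(f"kv{i}", i)
print(cache.tier_of("kv7"), cache.tier_of("kv3"), cache.tier_of("kv0"))  # HBM DRAM SSD
print(cache.get("kv0"), cache.tier_of("kv0"))  # 0 HBM
```

A production system would budget each tier in bytes and move data asynchronously; entry counts simply keep the example small.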

Kioxia and NVIDIA team up on GPU-direct SSDs for servers

Among the large established memory makers, the collaboration between Kioxia and GPU leader NVIDIA marks a major shift in AI server storage technology. The two companies aim to launch, by 2027, a solid-state drive whose read speed is nearly 100 times that of conventional SSDs. This breakthrough will mainly serve servers for generative AI computing.

Unlike conventional SSDs, which connect to GPUs through the CPU, the new SSD developed with NVIDIA will connect directly to the GPU and exchange data with it. This direct connection greatly improves data transfer efficiency, which is critical for AI computing. To meet the speed requirements, the SSD's random read performance will reach 100 million IOPS (input/output operations per second), about 100 times that of conventional products. Because NVIDIA requires 200 million IOPS from the SSDs attached to its GPUs, the target will be met by two SSDs working together. The product is expected to support PCIe 7.0, the next-generation PCI Express (PCIe) interface standard, laying the foundation for high-speed transfers.
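The figures in this paragraph fit together with simple arithmetic: NVIDIA's 200 million IOPS requirement split across two drives means each drive must deliver about 100 million IOPS, and "about 100 times" conventional products implies a baseline of roughly 1 million IOPS. The short Python sketch below checks this; the function name `devices_for_target` is hypothetical, and it assumes IOPS add up linearly when drives work in parallel, which real systems only approximate. The derived baseline is an implication of the stated ratios, not a measured value.

```python
def devices_for_target(target_iops: int, per_device_iops: int) -> int:
    """Smallest number of identical SSDs whose combined random-read IOPS
    meets target_iops, assuming throughput scales linearly across drives."""
    # Integer ceiling division avoids floating-point rounding on large counts.
    return -(-target_iops // per_device_iops)

target_iops = 200_000_000   # NVIDIA's requirement for GPU-attached SSDs
per_ssd_iops = 100_000_000  # per-drive rate implied by two drives sharing the load
speedup = 100               # "about 100 times" conventional products

print(devices_for_target(target_iops, per_ssd_iops))  # 2
print(per_ssd_iops // speedup)  # 1000000 (implied conventional-SSD IOPS)
```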

This high-speed SSD is intended not only to expand GPU memory capacity but, more importantly, to replace part of the HBM currently used in AI servers. It is expected to give AI computing a more cost-effective, flexible and scalable memory solution. The partnership with NVIDIA signals a shift in AI server storage technology that should accelerate the development and application of generative AI.

Memory innovation is gradually defining the future of AI

In the AI era, memory development is moving beyond simple capacity expansion toward deeper architectural innovation and ecosystem coordination. From Phison's push to popularize AI PCs through its Flash Management solution, to China's effort to replace HBM with domestic alternatives, to Kioxia's work with NVIDIA on GPU-connected SSDs, these efforts share a core goal: to overcome the limits of traditional memory and unlock AI's power at lower cost, with higher efficiency and broader applicability.

With these advances in memory technology, AI will no longer be the preserve of a few large enterprises; it can truly enter daily life and become a tool available to everyone. Future memory will be not just a unit that stores data but a cornerstone and accelerator of AI. This wave of memory innovation will profoundly affect the global technology industry and pave the way for AI's widespread adoption and deeper applications.